Segmentation, Instance Tracking, and data Fusion Using multi-SEnsor imagery (SIT-FUSE)

Principal Investigator

Nick LaHaye

Email: nicholas.j.lahaye@jpl.nasa.gov

Please visit our public GitHub repository and our GitBook documentation.

Please see our publicly available multi-sensor fire and smoke dataset here.

Description

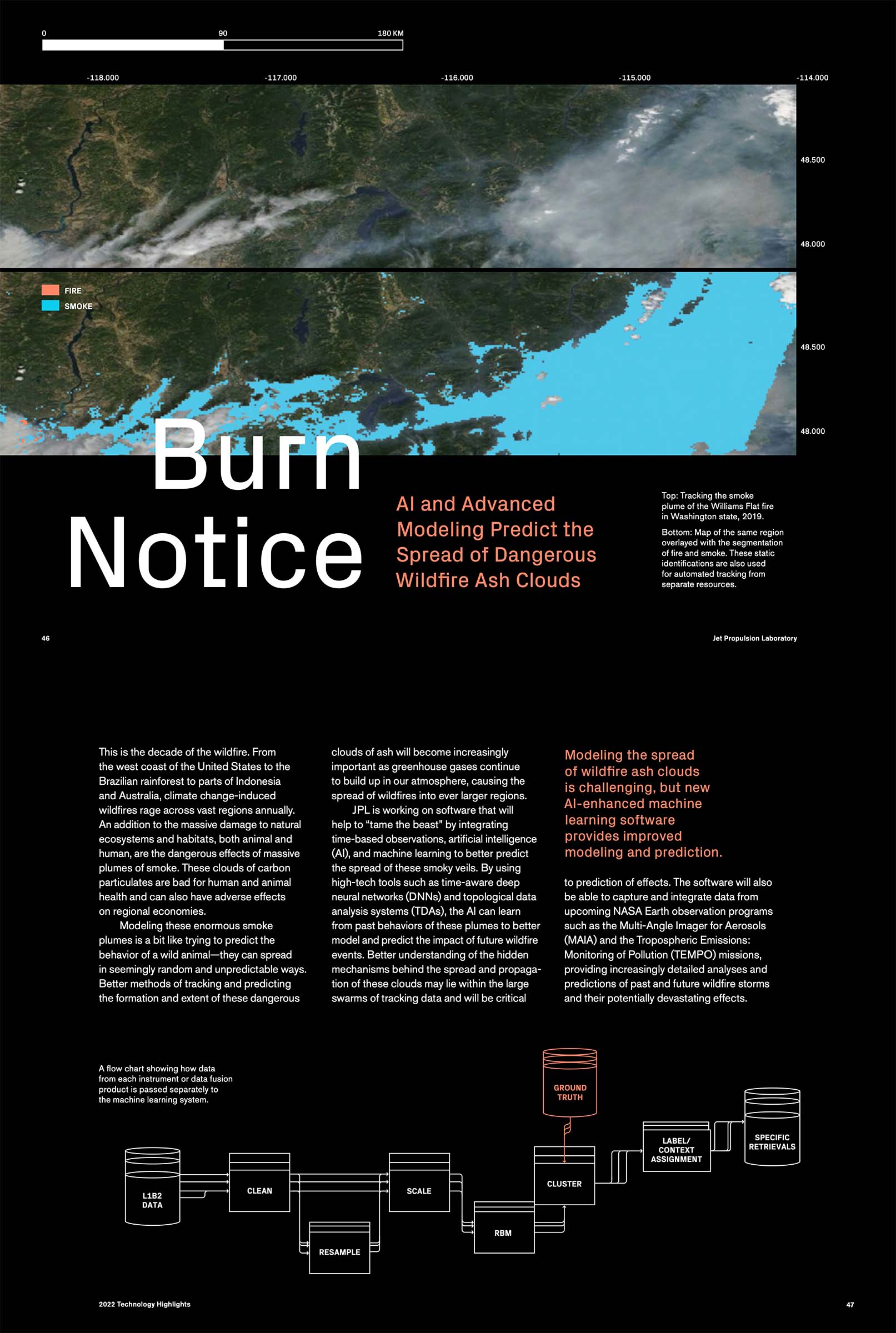

Accurate detection, segmentation, and tracking of geophysical objects (fire, smoke, algal blooms) at high spatiotemporal resolution are essential to better understand, and therefore model, complex Earth system interactions. Segmentation, Instance Tracking, and data Fusion Using multi-SEnsor imagery (SIT-FUSE) is a self-supervised deep learning framework that allows users to segment and track instances of objects in single- and multi-sensor scenes from orbital and suborbital instruments. Because it relies on self-supervised learning, SIT-FUSE keeps subject matter experts in the loop while removing the need for per-instrument detection algorithms and for the labor-intensive, sometimes error-prone task of manual per-pixel labeling.
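A toy sketch can illustrate how experts can stay in the loop without per-pixel labels: pixels are grouped into segments with no labels at all, and an expert then names whole clusters once instead of annotating individual pixels. This is an assumption, not SIT-FUSE's actual pipeline: plain k-means (with farthest-point initialization) on raw channel values stands in for clustering of learned self-supervised encodings, and `kmeans_segment` and `apply_expert_mapping` are hypothetical helpers.

```python
import numpy as np


def kmeans_segment(image: np.ndarray, k: int, iters: int = 20, seed: int = 0) -> np.ndarray:
    """Cluster the pixels of an (H, W, C) image into k segments, using no labels.

    Hypothetical stand-in: k-means on raw per-pixel channel vectors replaces
    clustering of learned self-supervised encodings.
    """
    h, w, c = image.shape
    pixels = image.reshape(-1, c).astype(float)
    rng = np.random.default_rng(seed)

    # Farthest-point initialization: start from a random pixel, then repeatedly
    # pick the pixel farthest from all centroids chosen so far.
    centroids = [pixels[rng.integers(len(pixels))]]
    for _ in range(1, k):
        d = np.min([((pixels - cen) ** 2).sum(axis=1) for cen in centroids], axis=0)
        centroids.append(pixels[d.argmax()])
    centroids = np.array(centroids)

    def assign(cents: np.ndarray) -> np.ndarray:
        # Index of the nearest centroid (squared Euclidean distance) per pixel.
        dists = ((pixels[:, None, :] - cents[None, :, :]) ** 2).sum(axis=2)
        return dists.argmin(axis=1)

    for _ in range(iters):
        labels = assign(centroids)
        for j in range(k):
            members = pixels[labels == j]
            if len(members):  # keep the previous centroid if a cluster empties
                centroids[j] = members.mean(axis=0)
    return assign(centroids).reshape(h, w)


def apply_expert_mapping(segments: np.ndarray, mapping: dict[int, str],
                         default: str = "background") -> np.ndarray:
    """Convert cluster IDs to class names from a per-cluster (not per-pixel) expert mapping."""
    out = np.full(segments.shape, default, dtype=object)
    for cluster_id, name in mapping.items():
        out[segments == cluster_id] = name
    return out


# Example: a synthetic two-band scene with a bright 3x3 "hot spot".
img = np.zeros((8, 8, 2))
img[2:5, 2:5] = 10.0
seg = kmeans_segment(img, k=2)
# The expert inspects the clusters and names one of them a single time.
labels = apply_expert_mapping(seg, {int(seg[3, 3]): "fire"})
print((labels == "fire").sum())  # 9
```

The design choice being illustrated is that the expert's effort scales with the number of clusters rather than the number of pixels.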

The framework can be used in domains where labels already exist, but it was also developed to perform well in low- and no-label environments. SIT-FUSE is beneficial because it:

- Detects anomalous observations from instruments with varying spatial and spectral resolutions.

- Removes the need for the labor-intensive and sometimes error-prone task of manual per-pixel label set generation while keeping the subject matter experts in the loop.

- Creates a sensor web of pre-existing instruments by incorporating observations from multiple historic and current orbital and suborbital missions, and can easily add new missions as they come online. The framework's architecture generates encodings for each instrument type separately, so the instruments can be used as a system of systems. This makes it possible to leverage whichever subsets of instruments are available and to add fusion layers, temporal components, and layers for downstream tasks modularly, as needed.
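The per-instrument encoding approach can be sketched as follows. This is a toy illustration under stated assumptions, not SIT-FUSE's implementation: `InstrumentEncoder` and `fuse` are hypothetical names, each encoder is a fixed random projection standing in for a trained network, observations are assumed to be already co-registered to the same N pixels, and a simple mean stands in for learned fusion layers.

```python
import numpy as np


class InstrumentEncoder:
    """Hypothetical per-instrument encoder: a fixed random projection from the
    instrument's native channel count to a shared embedding size (a stand-in
    for a trained network)."""

    def __init__(self, n_channels: int, embed_dim: int, seed: int):
        rng = np.random.default_rng(seed)
        self.weights = rng.normal(size=(n_channels, embed_dim)) / np.sqrt(n_channels)

    def encode(self, pixels: np.ndarray) -> np.ndarray:
        # pixels: (N, n_channels) -> embeddings: (N, embed_dim)
        return np.tanh(pixels @ self.weights)


def fuse(encoders: dict[str, InstrumentEncoder],
         observations: dict[str, np.ndarray]) -> np.ndarray:
    """Average embeddings over whichever registered instruments observed the scene.

    Assumes all observations are co-registered to the same N pixels; the mean
    is a placeholder for a learned fusion layer.
    """
    available = [name for name in encoders if name in observations]
    if not available:
        raise ValueError("no observations from any registered instrument")
    embeddings = [encoders[name].encode(observations[name]) for name in available]
    return np.mean(embeddings, axis=0)


# Example: two hypothetical sensors with different channel counts.
rng = np.random.default_rng(42)
encoders = {
    "sensor_a": InstrumentEncoder(n_channels=5, embed_dim=4, seed=0),
    "sensor_b": InstrumentEncoder(n_channels=12, embed_dim=4, seed=1),
}
scene = {"sensor_a": rng.normal(size=(10, 5)), "sensor_b": rng.normal(size=(10, 12))}
fused_all = fuse(encoders, scene)                          # both instruments
fused_a = fuse(encoders, {"sensor_a": scene["sensor_a"]})  # subset only
print(fused_all.shape, fused_a.shape)  # (10, 4) (10, 4)
```

Because each instrument has its own encoder, a new mission can be supported by registering one more entry in `encoders` without touching the existing ones, and a scene observed by only a subset of instruments still produces an embedding of the same shape.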

Current applications include wildfire and smoke plume segmentation and tracking, oil palm plantation segmentation, harmful algal bloom severity mapping and speciation, and dust plume segmentation, characterization, and tracking.

Select Publications

FIRE-D: NASA-centric Remote Sensing of Wildfire

Y. Chen, N. LaHaye, J. W. Choi, Z. Zhen, P. E. Davis, H. Lee, M. Parashar, Y. R. Gel

In preparation

Self-Supervised Contrastive Learning for Wildfire Detection: Utility and Limitations

J. Choi, N. LaHaye, Y. Chen, H. Lee, Y. Gel

Advances in Machine Learning and Image Analysis for GeoAI, Elsevier, 2024

A Quantitative Validation of Multi-Modal Image Fusion and Segmentation for Object Detection and Tracking

N. LaHaye, M. J. Garay, B. Bue, H. El-Askary, E. Linstead

Remote Sens. 2021, 13, 2364.

Multi-modal object tracking and image fusion with unsupervised deep learning

N. LaHaye, J. Ott, M. J. Garay, H. El-Askary, E. Linstead

IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 12, no. 8, pp. 3056-3066, Aug. 2019