Barefoot Rover

Principal Investigator

Jack Lightholder

Email: jack.a.lightholder@jpl.nasa.gov

Please visit our public GitHub repository for more information.

Open source data from this project can be found in our Zenodo community.

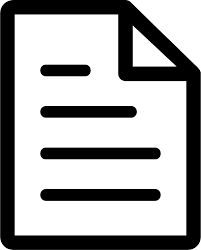

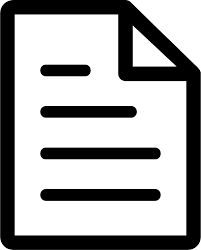

In this work, we demonstrate an instrumented wheel concept which utilizes a 2D pressure grid, an electrochemical impedance spectroscopy (EIS) sensor and machine learning to extract meaningful metrics from the interaction between the wheel and surface terrain. These include continuous slip/skid estimation, balance, and sharpness for engineering applications. Estimates of surface hydration, texture, terrain patterns, and regolith physical properties such as cohesion and angle of internal friction are additionally calculated for science applications. Traditional systems rely on post-processing of visual images and vehicle telemetry to estimate these metrics. Through in-situ sensing, these metrics can be calculated in near real time and made available to onboard science and engineering autonomy applications. This work aims to provide a deployable system for future planetary exploration missions to increase science and engineering capabilities through increased knowledge of the terrain.

Planetary rovers rely heavily on visual images and telemetry, such as motor current, to navigate while maintaining the health and safety of the vehicle. Rover motion activities are planned in advance by operations teams and then carried out by the vehicle. Thresholds, set by operators during the activity planning phase, are monitored by the vehicle to ensure it remains within bounds. These cover metrics such as the rate of slip between the wheel and the surface, vehicle pitch/tilt/roll, and drive motor currents. While some of these parameters can be measured directly, others, like slip, must be inferred, for example from external, introspective camera systems that extend beyond the wheel itself. Complex models, such as ARTEMIS, infer slippage from deviations between the expected and actual final rover location and orientation. Knowledge of these values can enable more efficient vehicle traverses and further inform scientists about local terrain characteristics. Past efforts have derived terrain mechanical properties from rover telemetry and visual data, and some have applied ML-based proof-of-principle systems. However, all of these efforts rely on inferred interactions that are only available through post-hoc ground analysis. With instrumentation embedded directly in the wheel, these metrics can be computed directly and made available to vehicle science and navigation systems in near real time.
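To make the slip metric concrete, the sketch below uses the standard terramechanics definition of longitudinal slip, which compares the wheel's rim speed with the vehicle's actual forward speed. It is only an illustration of the quantity being estimated, not the Barefoot Rover method: the function names, the sign convention (positive for slip, negative for skid), and the example threshold are all assumptions made here.

```python
def slip_ratio(wheel_radius_m, angular_velocity_rad_s, forward_velocity_m_s):
    """Return longitudinal slip in [-1, 1].

    Positive values mean slip (wheel spins faster than the vehicle moves),
    negative values mean skid (vehicle moves faster than the wheel rolls).
    Assumes forward motion only, so both speeds must be non-negative.
    """
    if angular_velocity_rad_s < 0 or forward_velocity_m_s < 0:
        raise ValueError("this sketch only handles forward motion")

    rim_speed = wheel_radius_m * angular_velocity_rad_s
    if rim_speed == 0.0 and forward_velocity_m_s == 0.0:
        return 0.0  # wheel and vehicle both at rest

    if rim_speed >= forward_velocity_m_s:
        # Driving case: normalize by rim speed.
        return (rim_speed - forward_velocity_m_s) / rim_speed
    # Braking case: normalize by vehicle speed.
    return (rim_speed - forward_velocity_m_s) / forward_velocity_m_s


def exceeds_slip_limit(slip, limit=0.6):
    """Hypothetical operator threshold check; the 0.6 default is illustrative."""
    return abs(slip) > limit
```

A wheel of radius 0.25 m turning at 2 rad/s has a rim speed of 0.5 m/s; if the vehicle advances at only 0.4 m/s, the slip ratio is about 0.2. The difficulty described above is that the forward velocity is not directly observable from the wheel alone, which is why it has traditionally been inferred from imagery or post-hoc localization.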

The classification of terrain type can critically inform traversability as well as geologic science. This classification is traditionally performed using accelerometers and vibrational analysis, identifying frequency distributions for each terrain type. Such 1D datasets can infer certain terrain types in fast-moving vehicles (e.g. self-driving cars), but at the cautious speeds typical of exploration robots the vibrational input is weak and unreliable. Further, vibrational analysis alone cannot provide detailed science estimates of surface properties such as the presence and thickness of crusts or rough surface morphology. A limited set of embedded pressure sensors has been used to assess wheel contact and load distribution and to estimate drawbar pull; however, these do not provide the full contact geometry.

A 2D grid sensor instead supplies a pressure/conductivity “image” of the surface/wheel interface, allowing estimation of surface properties and direct measurement of several key contact parameters, such as traction coefficients, in real time. Knowledge of the current slip and sink rates of each wheel could be used to halt a drive, while the recognition of rock below the wheel could result in a better model for distributing power to the wheels. Increased situational awareness can benefit scientific yield as well. In exploratory planetary mission scenarios, detected terrain changes can trigger bursts of remote sensing from onboard science instruments. Simultaneously, wheels that carry additional in-situ sensors may extract regolith parameters of potential scientific interest, such as soil hydration and cementation. This new sensing modality has promise to support Lunar/Martian exploration at cruising speeds and to enable longer traverses.
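As a rough illustration of what treating the sensor output as a pressure “image” can mean, the sketch below summarizes a single frame by its contact area, total load, and center of pressure. This is a hand-written feature computation under assumed conventions (a list-of-lists grid, arbitrary pressure units, a hypothetical `contact_summary` helper and noise threshold); it is not the project's ML pipeline, which learns its estimates from data.

```python
def contact_summary(pressure_grid, threshold=0.0):
    """Summarize one pressure frame from a 2D sensor grid.

    pressure_grid: list of rows, each a list of pressure readings.
    threshold: readings at or below this value are treated as no contact
               (an assumed noise floor, not a calibrated value).
    Returns the number of cells in contact, the summed pressure, and the
    pressure-weighted centroid as (row, column) grid coordinates.
    """
    cells = 0
    total = 0.0
    row_moment = 0.0
    col_moment = 0.0

    for r, row in enumerate(pressure_grid):
        for c, p in enumerate(row):
            if p > threshold:
                cells += 1
                total += p
                row_moment += r * p
                col_moment += c * p

    if cells == 0:
        # No contact detected anywhere on the grid.
        return {"cells": 0, "total": 0.0, "center": None}

    return {
        "cells": cells,
        "total": total,
        "center": (row_moment / total, col_moment / total),
    }


frame = [
    [0.0, 0.0, 0.0],
    [0.0, 2.0, 2.0],
    [0.0, 0.0, 0.0],
]
summary = contact_summary(frame)
```

For this frame, two cells are in contact and the center of pressure sits midway between them, at row 1.0 and column 1.5. Tracking how such a summary shifts from frame to frame is one simple way a contact image carries more geometry than a single load reading.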

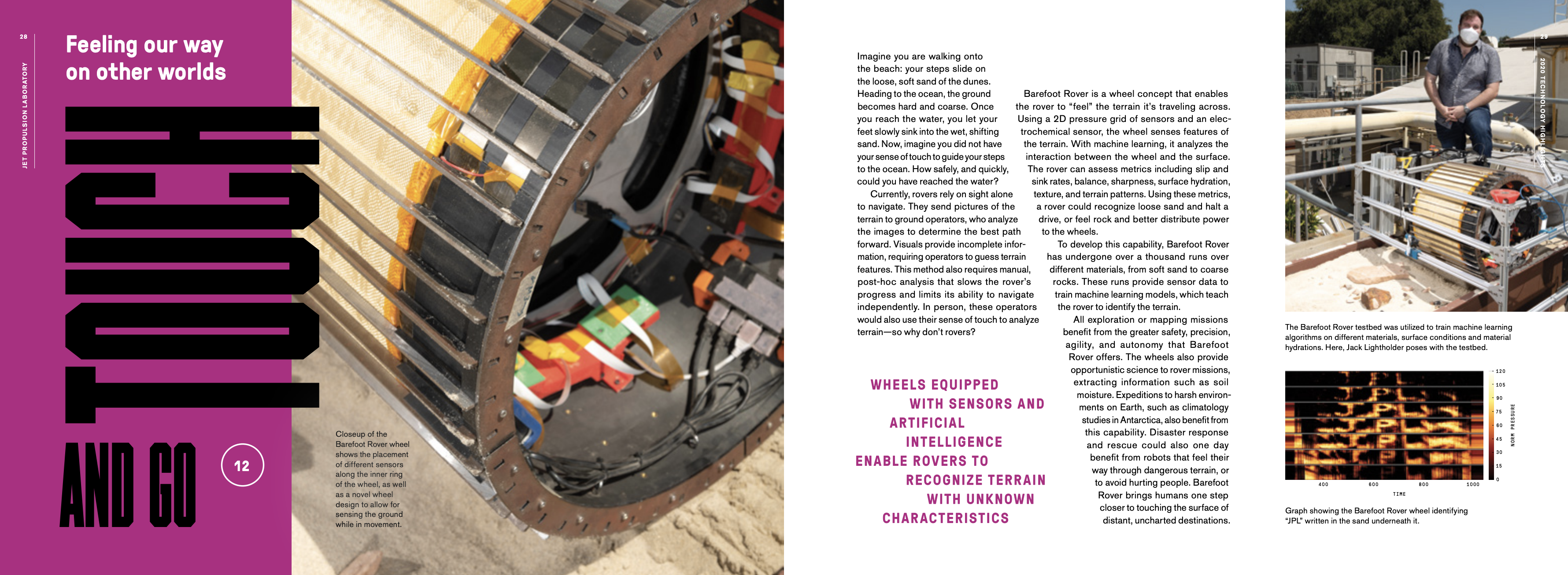

The Barefoot Rover tactile wheel carries a payload of an electrochemical impedance spectrometer (EIS), for hydration detection, and is wrapped in a 2D grid of pressure sensors, which give continual context for the pressure signature of the ground underneath the wheel. Moreover, the Barefoot Rover utilizes ML to extract information from these in-situ sensors and to train models that are capable of near real-time estimation for engineering and science. This benefits every rover that wants to understand what it is touching; direct contact information can inform mission operations path planning, enhance visual terrain mapping, provide input to agile science and serve as an important feedback loop for future autonomous systems.

The Barefoot Rover project was highlighted in the JPL 2020 Technology Highlights report! View the full report here.

Select Publications

Marchetti, Yuliya, et al. “Barefoot rover: a sensor-embedded rover wheel demonstrating in-situ engineering and science extractions using machine learning.” 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2020.

Chen, Yuzhou, et al. “TCN: Pioneering Topological-Based Convolutional Networks for Planetary Terrain Learning.” Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 36. No. 11. 2022.

Southwell, Bryan W. Terramechanics and machine learning for the characterization of terrain. MS thesis. The University of Western Ontario (Canada), 2020.
